Isomorphisms between Time and Tone

This article was written and edited over two semesters at McGill. It was a central element of my studies during my semester-long internship at IRCAM. This article was subsequently published on their website.

[Introduction]

The musical domains of tone and time are commonly distinguished when discussing sound art. Different cultures and their musical traditions can be discerned by the various perspectives and systems they employ when engaging with these domains. There is evidence that various musics from around the world exhibit structural similarities across their manipulations of tone and time. These similarities can be mapped in the form of isomorphic or identical models. While these domains appear strikingly different perceptually, the existence of such isomorphisms hints at the possibility that they share fundamental commonalities in the ways they are processed cognitively.

Several articles explore these structural similarities from different perspectives (Pressing 1983, London 2002, Rahn 1975, Stevens 2004, Bar-Yosef 2007, etc.), alluding to a myriad of possibilities for connection. In Western music, electronic music brought about considerations of time and tone as interchangeable concepts, most notably in the writings of Stockhausen (in Structure and Experiential Time and The Concept of Unity in Electronic Music) and Grisey (in Tempus Ex Machina). The book Microsound, by composer Curtis Roads, tackles this subject and provides helpful references to other composers in this vein.

The goal of this paper is to identify and discuss models which can effectively relate various musical phenomena across the domains of time and tone. I will explore the theoretical basis for such isomorphisms, both reviewing established models and introducing new ones. Beginning with a brief review of select physical properties of sound, I will explore the perceptual distinction which separates these two domains. This understanding will inform the investigation and evaluation of subsequent theoretical equivalences. I will discuss the pertinence of models by comparing their ability to relate unique or multiple musical phenomena. Models will be evaluated from the perspectives of perception/cognition, relevance to existing music (general musicology), and their functional limitations.

[The Root Issue]

When considering the perceptually disparate concepts of time and tone, it is helpful to begin at the instance in which both are physically identical: pulsation and frequency. A frequency is the rate at which air pressure cycles in and out of equilibrium. A pulsation is an onset which recurs at a single constant duration. For purposes of clarity, this paper will briefly ignore the specific onset/offset amplitude envelopes and refer to each unit of pressure disturbance as a grain: a sufficiently brief sonic impulse. These definitions can be merged by claiming that both frequency and pulsation are periods of pressure disturbance (or grains), regarding pulsation as a slow frequency (<20Hz) and pitch as a fast pulsation (>20Hz). For purposes of clarity when discussing perceptual phenomena, this paper associates pulsation with rhythm/time and frequency with pitch/tone.

To begin, it is first necessary to imagine how a single physical stream of events manifests as the two disparate perceptions of tone and time. This can be done through a simple example. Take a chain of identical grains pulsating steadily at a rate of five times per second (5Hz). This chain will be heard as five discrete points within each passing second. However, as the grains pulse more quickly than 20 grains per second (20Hz), the perception of an individual grain is no longer possible. Where each individual grain was once identifiable, instead the inter-onset interval (IOI) between grains is “listened to” and abstracted into a tone. The sensation of pitch occurs when grains are replaced by the perception of a temporally-abstract, “atemporal” tone. In other words, discrete grains pulsating at 500Hz are not perceived as 500 events but as one event: a single, static tone. It is important to note that the auditory system does not literally operate in this manner; the current example merely serves to clarify the conceptual distinction.
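The grain example above can be sketched in a few lines of Python. This is only an idealized illustration: the 20Hz boundary is the figure used in this paper, while the function name and the hard cutoff are assumptions made for the sketch (the real perceptual transition is gradual).

```python
PITCH_THRESHOLD_HZ = 20.0  # boundary used in this paper; a hard cutoff is an idealization


def describe_grain_train(rate_hz):
    """Return (inter-onset interval in seconds, idealized percept) for a steady grain train."""
    if rate_hz <= 0:
        raise ValueError("rate must be positive")
    ioi_seconds = 1.0 / rate_hz  # time between successive grain onsets
    percept = "pulsation" if rate_hz < PITCH_THRESHOLD_HZ else "tone"
    return ioi_seconds, percept


for rate in (5, 500):
    ioi, percept = describe_grain_train(rate)
    print(f"{rate} Hz: IOI = {ioi:.4f} s, heard as {percept}")
```

The same physical quantity (the IOI) is computed in both cases; only the label attached to it changes once the rate crosses the threshold.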

By manipulating the grain-rate (i.e. frequency) of a physical signal, the perceptual difference that separates pulsation and frequency is identified: the abstraction of time. Pulsations that are perceived as “faster” or “slower” in the temporal domain are transformed into tones and perceived as “higher” or “lower” in the pitch domain (or through other associations, depending on the culture[1]). This abstraction of time urges the paper, from here on, to consider all time-related events as belonging to the rhythmic domain. For clarity, the terms onset and offset are extended to include even the most unclear attacks and decays.

The paper arrives at the basic, perceptual disconnect between the two musical domains: the perception of time in pulsation and the perception of tone in frequencies. At slow cycle durations (pulsation), time between onsets is consciously available and onset/offset pairs are distinguishable; at fast cycle durations (frequency) time is not perceptible and individual onset/offset pairs are not accessible. The paper will continue to discuss time and tone as two distinct musical domains.

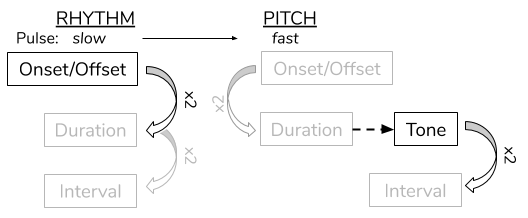

Figure 1

Given this discrepancy, it is important to question how it remains possible to search for isomorphisms between these two non-isomorphic domains (Figure 1). While it is clear that there are vast perceptual differences between the two musical domains, it is interesting to observe that both domains are often approached through proportions (the “intervals” in Figure 1). It can be shown that similar cognitive structures serve to compare units within both the temporal and pitch domains.[2] Different musical traditions impose abstract, proportional “maps” onto these domains in the form of tonality, meters, scales, rhythmic patterns, etc. The prevalence of proportional systems across both domains is what encourages this paper to consider the relationship between them.

It is then pertinent to consider the units in either domain which facilitate these proportions, as well as how they can be related. This paper proposes two possibilities for which units may be equated. The first explores durations, fundamental to both pitch and rhythm, as the units of these models. The second, later on in this paper, relates the perceptible members of each domain (bold-marked in Figure 1).

[Basic Proportional Relationships]

The harmonic series is a common framework for evaluating integer-based proportional relationships between frequencies. A harmonic series is created by taking a root frequency and multiplying it by incrementally-ascending integers. Instead of discussing each harmonic as a fixed frequency, this paper will use the harmonic series relatively in order to evaluate any root value. For pulsation and frequency, the “unison” and “whole note” are taken as references and multiplied by progressively-ascending integers.
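As a minimal sketch of this relative use of the harmonic series, the snippet below multiplies a root frequency by ascending integers and, in parallel, divides a reference duration by the same integers. The function names and the example root of 110Hz are illustrative assumptions, not values taken from the figures.

```python
from fractions import Fraction


def harmonic_series(root_hz, count):
    """Frequencies obtained by multiplying the root by 1, 2, 3, ... count."""
    return [root_hz * n for n in range(1, count + 1)]


def subdivisions(count):
    """Durations, as fractions of the reference note, obtained by dividing it into 1..count parts."""
    return [Fraction(1, n) for n in range(1, count + 1)]


print(harmonic_series(110, 4))            # integer multiples of a 110 Hz root
print([str(d) for d in subdivisions(4)])  # whole, half, third, and quarter of the reference duration
```

Row n of the series therefore pairs a frequency n times the root with a duration 1/n of the reference note.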

In Figure 2, the bottom row of the left chart represents the root, and the rows above represent integer-multiples of the root. Each row contains three columns containing the pitch, rhythmic, and integer interval with respect to the root. In this first example, the paper limits its consideration to a single row of the harmonic series at a time.

An interesting equivalence suggested by this model is between octaves and double time. Because pitch tends to operate at proportions ≤ 0.5 and rhythm at proportions ≥ 0.5, it is precisely at this 0.5 (or 1:2) ratio that the two domains share a strong quality. This shared quality will be considered in greater detail later on in the paper.

Considering the connection between tone and time through single harmonic ratios does not prove to be very powerful, for a number of reasons. The first disparity is that our “range of hearing” in the temporal domain is comparatively smaller than that of the pitch domain. The human range of beat perception occurs at 0.5-4Hz.[3] As a pulse grows to a length of 2 seconds, events dissociate and a pulse is undetectable. As a root is subdivided beyond 20 times per second, the pulse converts into pitch. This rhythmic “range of hearing” from 2 seconds to 1/20 seconds forms a ratio of 1:40. Pitch, by contrast, can go from 20Hz to 20,000Hz,[4] a ratio of 1:1000, which is 25 times greater than that of rhythm.
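The arithmetic behind this comparison can be checked directly. The sketch below uses only the boundary values quoted in this section; the variable names, and the conversion of each span into octaves, are additions for illustration.

```python
import math
from fractions import Fraction

# Boundary values quoted in this section
rhythm_longest_s = Fraction(2)       # pulses longer than 2 s dissociate
rhythm_shortest_s = Fraction(1, 20)  # pulses faster than 20 per second become pitch
pitch_low_hz = Fraction(20)
pitch_high_hz = Fraction(20_000)

rhythm_span = rhythm_longest_s / rhythm_shortest_s  # 1:40
pitch_span = pitch_high_hz / pitch_low_hz           # 1:1000
relative_size = pitch_span / rhythm_span            # 25

print(rhythm_span, pitch_span, relative_size)
# The same spans in octaves (illustrative addition)
print(math.log2(rhythm_span), math.log2(pitch_span))
```

Expressed in octaves, the rhythmic span covers roughly 5.3 doublings while the pitch span covers roughly 10.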

Figure 3

The second disparity discernible in this model arises from a musical perspective. In most musical cultures, scales partition an octave (1:2 ratio) into at least 4 parts. This means that, in the tonal domain, the root (or “tonic”) is able to form complex, non-integer ratios with simultaneous or subsequent pitches. Rhythm, however, (1) more commonly features much larger intervallic leaps, and (2) more often features low-integer ratios (1 : x, where x is a low integer) that subdivide by x recursively.

It is hypothesized that these rhythmic features are due to the cognitive tendency to relate all temporal events to a perceived beat (i.e. rhythmic entrainment)[5] combined with a preference for events to be grouped recursively in units of 1, 2, and 3 (i.e. subitization).[6] Essentially, a beat will be divided into a few small, usually evenly-spaced parts. Those parts are then similarly divided again. Repeating this process yields a strong preference for the ratios highlighted in Figure 2. In the figure, blue columns are products of 2-subdivisions (duplets), yellow columns are products of 3-subdivisions (triplets), and green columns are products of both 2- and 3-subdivisions. Pitch, however, does not exhibit these same tendencies.
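The recursive 2- and 3-subdivision described above amounts to keeping only those rows of the harmonic series whose integer contains no prime factor other than 2 and 3. The following sketch sorts integers into those families; the function name and the returned labels are hypothetical and chosen only for this illustration.

```python
def subdivision_family(n):
    """Classify a subdivision count by whether it is reachable through repeated 2- and 3-divisions."""
    if n < 1:
        raise ValueError("n must be a positive integer")
    twos = threes = 0
    while n % 2 == 0:  # strip every factor of 2
        n //= 2
        twos += 1
    while n % 3 == 0:  # strip every factor of 3
        n //= 3
        threes += 1
    if n != 1:
        return "other"  # needs a larger prime (5, 7, ...)
    if twos and threes:
        return "2- and 3-subdivisions"
    if twos:
        return "2-subdivisions"
    if threes:
        return "3-subdivisions"
    return "root"


for n in range(1, 17):
    print(n, subdivision_family(n))
```

Under this reading, rows such as 5, 7, 10, or 11 fall outside the recursively reachable subdivisions.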

Figure 4

x : (x+1)

Above, the paper considered one row of the harmonic series at a time. In this example, the paper looks at two adjacent rows occurring simultaneously (cross-rhythms and dyads). Two adjacent rows form phasing ratios: x : (x + 1). While these ratios offer a very limited window to the harmonic series, they present characteristics useful for drawing isomorphisms.

Considering phasing relationships within pulsation and frequency has several advantages. First, two cycles going in and out of phase alignment in a periodic fashion result in a perceivable, higher-level structure. This higher-level structure is perceivable across both the temporal and pitch domains. In time, the higher level is felt as a longer temporal cycle. The leftmost depiction in Figure 5 demonstrates how, at slower pulsations, a higher level arises from the oscillation between minimal and maximal alignment of the grains. In tone, the higher level is perceivable as a periodic amplitude modulation, called “beating.”[7] The rightmost depiction demonstrates how the amplitude envelopes of each grain combine, forming a “hairpin” shape in the amplitude. This higher level can even be perceived as a separate pitch if the cycling frequency is sufficiently high.[8] Simply put, every time two phasing pulsations or frequencies are played, the root (:1) is identified such that the initial x : (x + 1) becomes x : (x + 1) : 1.
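The claim that x : (x + 1) implies the root (:1) can be illustrated by placing both streams on a cycle of length 1 and listing the instants at which their onsets coincide. This is a sketch using idealized, instantaneous onsets; the function names are assumptions made for the example.

```python
from fractions import Fraction


def onsets(x):
    """Onset times of a stream that divides a cycle of length 1 into x equal parts."""
    return {Fraction(k, x) for k in range(x)}


def alignments(x, y):
    """Instants within one cycle at which both streams sound together."""
    return sorted(onsets(x) & onsets(y))


for x in (2, 3, 6):
    print(f"{x}:{x + 1} aligns at", [str(t) for t in alignments(x, x + 1)])
```

For every adjacent pair, the streams coincide only at the start of the cycle, so the composite pattern repeats exactly once per cycle, which is the period of the root.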

Figure 5a

Figure 5b

The next advantage of this approach is the ability for pulsations in the temporal domain to employ ratios beyond 1 : x. With the opportunity for simultaneity, it is possible to identify common ratios between the pitch and rhythm domains such as 2:3 and 3:4. These polyrhythms are extremely common across musical geography.[9] The pitch equivalents of these ratios are the just fifth (2:3) and just fourth (3:4) dyads, which are also extremely common intervals. Perceptually speaking, all phasing polyrhythms x : (x + 1) in music generally have the same perceived “beating” quality, meaning that 6:7, for example, resembles 17:18 simply because they both phase.[10] Theoretically, there exist isomorphisms between any two phasing polyrhythms and dyads. From a performance perspective, however, musicians experience much greater difficulty when attempting higher-integer phasing ratios in polyrhythms than in dyads, which argues against this general comparison.[11]
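To make the pitch side of these ratios concrete, a ratio can be converted into an interval size. The sketch below uses cents (1200 per octave), a unit this paper does not otherwise use; it is introduced here purely as an illustrative assumption, as is the function name.

```python
import math


def ratio_to_cents(low, high):
    """Size of the interval low:high in cents (1200 cents = one octave, 1:2)."""
    return 1200 * math.log2(high / low)


for low, high, name in ((1, 2, "octave"), (2, 3, "just fifth"), (3, 4, "just fourth")):
    print(f"{low}:{high} ({name}) = {ratio_to_cents(low, high):.1f} cents")
```

The same ratios read rhythmically are simply "two against three" and "three against four"; only the pitch reading requires the logarithm.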

x : (x+n)

While discussing performance of polyrhythms, it is interesting to note that the ratio 2 : x, which manifests itself in many examples within the pitch domain, is very easy to produce rhythmically. In an attempt to explore more complex intervals within the temporal domain, the paper now considers x : (x + n) for n > 1; in other words, simultaneous non-adjacent rows of the harmonic series.

Above, it was shown that n = 1 (the ratio x : (x + 1)) yields one simple cycle away from and back to alignment. For n > 1, however, the two streams x and (x + n) must go through n rounds of phasing before realigning. Put simply: while approaching alignment, the two streams arrive asynchronously and create an imperfect alignment. They must then cycle through additional round(s) of phasing before realigning synchronously. This process is visible in Figure 6, which considers x = 28 and n = 3 for a ratio of 28 : 31. Alignment is depicted by the right-most solid box, whereas faux-alignments are depicted with dotted boxes. Clockwise, the first box depicts a global alignment, followed by a local maximum where the light-green 28-stream is lagging, and finally a local maximum where the dark-green 31-stream is lagging. This example demonstrates how the number of phases between global maxima is equal to n.

Figure 6

As the values x and (x + n) increase, faux-alignments become more closely aligned. At high enough values, they become indiscernible from total alignment. In these cases, the beat frequency is actually perceived to be n, regardless of whether or not n is a common factor of both streams. Ultimately, the frequency of a beat between any two rows in the harmonic series is their difference, n. This phenomenon is perceivable at both low and high x-values, though, as mentioned, faux-alignments are less convincing beat-replacements at low x-values. Figure 6 is an example of a phasing pulsation with a beating pattern that, at varying tempi, can be perceived either as one long beat or as three faster beats. Once more, from a musical perspective, the relative difficulty of producing such complex polyrhythms, and their rarity relative to their tonal counterparts, argues against this comparison.
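The faux-alignments of the 28 : 31 example can be located numerically. The sketch below measures, for every onset of the x-stream, the distance to the nearest onset of the other stream on a cycle of length 1, and then reports the onsets at which that distance is a strict local minimum. The function names are hypothetical, and the strict-minimum test is a simplifying assumption that may miss tied cases in other ratios.

```python
from fractions import Fraction


def gaps_to_nearest(x, y):
    """Distance from each onset k/x to the nearest onset of the y-stream (cycle length 1)."""
    gaps = []
    for k in range(x):
        t = Fraction(k, x)
        j = round(t * y)  # index of the closest y-stream onset
        gaps.append(abs(t - Fraction(j, y)))
    return gaps


def near_alignments(x, y):
    """Indices of x-stream onsets where the gap is a strict local minimum around the cycle."""
    gaps = gaps_to_nearest(x, y)
    return [
        k for k in range(x)
        if gaps[k] < gaps[k - 1] and gaps[k] < gaps[(k + 1) % x]
    ]


gaps = gaps_to_nearest(28, 31)
for k in near_alignments(28, 31):
    print(f"onset {k} of the 28-stream: gap = {gaps[k]} of a cycle")
```

For 28 : 31 this reports three minima per cycle: one exact alignment (gap 0) and two near misses of 1/868 of a cycle each, matching n = 3.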

A disparity highlighted by this model appears in the temporal domain, where one pulsation is cognitively elected as the tactus (or reference pulse) in polyrhythms.[12] When one pulse is entrained to, all other pulses are heard relatively and subordinately to the entrained pulse. This is most likely caused by the perceptual inability to entrain to multiple pulses simultaneously.[13] Pitch perception, unlike rhythm, does not automatically produce a hierarchy. In non-tonal contexts, both members of a dyad are equal with only a slight preference for the faster frequency.[14]

As a final consideration in this section, the paper considers two or more simultaneous adjacent proportions. While it is common for frequencies to form ratios with three or more values which do not share common factors (e.g. the dominant seventh chord, 4:5:(6):7), it is virtually impossible to find a polyrhythmic equivalent in the music or psychology literature. Similarly to our previous discussion on the “range of hearing,” pitch ultimately has a much higher tolerance for stacking non-inclusive proportions than rhythm.

To conclude, conceiving of pitch and time in terms of their shared acoustical properties (pulses and frequencies) opens several avenues for inter-domain connections to be made. Contributions like double time and octave equivalence, beating, perceived stability, and the different proportional “domains” that pitch and rhythm commonly exemplify all offer valuable insight into further isomorphic models. Nevertheless, an approach which focuses solely on proportional relationships between pitch and rhythm ultimately struggles to connect with music.

[Divisive Cyclic Models]

A powerful route opens when considering an abstraction of time in the pitch domain. Recall that frequencies in octave relation are perceived as highly similar.[15] That is, a frequency value x and its multiples or divisions by powers of two (i.e. its octave transpositions) are nearly interchangeable. This phenomenon is called “pitch class.” Unlike frequencies, which map linearly, pitch classes can be mapped on a circle. To do this, a line is drawn from any frequency x to its octave 2x. The ends of this line then wrap together, forming a circle. The frequencies on the line between x and 2x retain their relative distances as they bend around. This circle depicts not the frequency values of points, but the proportions/intervals between them. The opposite ends of the circle, which represent the greatest intervallic distance, form an interval of 1 : √2 (tritone). This circle can be demarcated by any number of pitch-classes in any intervallic pattern.
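One way to sketch this wrapping is to place each frequency on the circle according to the fractional part of its distance, in octaves, from a reference frequency. The logarithmic spacing is an assumption of this sketch (it is what places the 1 : √2 tritone exactly opposite the reference), and the function name and the 440Hz reference are illustrative.

```python
import math


def pitch_class_angle(freq_hz, reference_hz):
    """Angle in degrees (0 up to 360) of a frequency on a pitch-class circle anchored at reference_hz."""
    if freq_hz <= 0 or reference_hz <= 0:
        raise ValueError("frequencies must be positive")
    octaves = math.log2(freq_hz / reference_hz)  # signed distance in octaves
    return (octaves % 1.0) * 360.0               # discard whole octaves, keep the position


reference = 440.0
for freq in (220.0, 440.0, 880.0, 440.0 * math.sqrt(2), 660.0):
    print(f"{freq:8.2f} Hz at {pitch_class_angle(freq, reference):6.1f} degrees")
```

Octave transpositions of the reference all land on the same point, while the tritone lands half-way around the circle.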

This carries the paper into the second model of pitch/time isomorphisms: cyclic models. In the temporal domain, a cyclic model arises when a pulsation is wrapped onto itself so that a single onset serves as both the beginning and end of a fixed-duration cycle. As mentioned earlier, this model draws an isomorphism between the two audible units of each domain: tones (in the form of pitch classes) and onsets. Pitch-classes demarcate points on the pitch-class circle, while onsets demarcate points on the rhythm circle. Isomorphisms can only be drawn from “strong isomorphic” circles: circles with the same number and spacing-pattern of divisions.
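The “same number and spacing-pattern” criterion can be sketched as a comparison of step patterns around two circles. In this illustration both circles have 12 equal divisions; the pairing of a major scale with a 12-pulse onset pattern is an example chosen for the sketch, and the function names are assumptions. Rotations of a pattern are not treated as equivalent here.

```python
def step_pattern(points, cycle_length):
    """Step sizes between successive marked points around a circle of cycle_length divisions."""
    pts = sorted({p % cycle_length for p in points})
    return [
        (pts[(i + 1) % len(pts)] - pts[i]) % cycle_length or cycle_length
        for i in range(len(pts))
    ]


def same_spacing(points_a, points_b, cycle_length):
    """True when both circles mark the same number of points with the same step pattern."""
    return step_pattern(points_a, cycle_length) == step_pattern(points_b, cycle_length)


major_scale_pitch_classes = [0, 2, 4, 5, 7, 9, 11]  # semitones above the tonic
twelve_pulse_onsets = [0, 2, 4, 5, 7, 9, 11]        # pulses within a 12-pulse cycle
even_four_onsets = [0, 3, 6, 9]                     # four evenly-spaced onsets

print(step_pattern(major_scale_pitch_classes, 12))
print(same_spacing(major_scale_pitch_classes, twelve_pulse_onsets, 12))
print(same_spacing(major_scale_pitch_classes, even_four_onsets, 12))
```

The comparison looks only at the pattern of points, not at what the divisions measure (semitones in one case, pulses in the other).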

There are two hurdles to overcome in drawing this isomorphism. The first is the issue of equating onsets/offsets with tones. The second is the initial disparity between time and tone.

The disjunction between onset/offset and pitch is recalled from Figure 1. The audible members of each domain (tones and onsets) exist at different levels of abstraction. While evenly-cycling onsets create a repeating duration, pitches (which require two repeating durations) yield a proportion: a tone interval. Essentially, the intervals within the temporal cycle will not have the same values as intervals along the pitch cycle. For the rhythm circle, the opposite ends will feature a ratio of 1 : 2; in pitch, 1 : √2. The paper overlooks this discrepancy when seeking the isomorphism. Instead of attempting to equate the units of the circle, this paper is interested in the patterns of points.

The next hurdle, pointed out at the beginning of the paper, is reconciling the role of time across the two models. In a rhythm circle, time always moves in one direction. In a pitch-class circle, time does not exist. This can be easily overcome by using the simple depiction in Figure 7. Consider a ball which can travel along the circumference of the pitch-class or rhythm circle. A point sounds whenever the ball moves onto it. In a pitch-class circle, the ball can be stationary, slide, or skip instantaneously in any direction across the real pitches. However, in a rhythm circle, the ball must continuously move in the same direction and pass between every point along the circle.

Figure 7a

Figure 7b

This connection is quite powerful. In his paper, “Cognitive Isomorphisms between Pitch and Rhythm in World Musics,” Pressing points out 24 pages' worth of isomorphisms between two musical cultures: 12-tET in Western music and Sub-Saharan African 12-beat music. Beyond his observations, isomorphisms between expressive musical techniques are also revealed by mapping them into these cycles. Pitch slides and inflections find their counterparts in rubato and tempo stretching. Detunings have their counterpart in rhythmic swing; the direction of swing (relative to the beat) is isomorphic with sharp vs. flat detuning (relative to the scale).

The main disparity exemplified by this model is similar to that of the previous model. Musical rhythm commonly occurs as recursive divisions of the circle through small integer values. Pitch, in many cultures, does not subdivide the circle recursively. This difference likely arises because rhythm tends toward recursive subdivision, whereas tonal scales (pitch-class sets) are hypothesized to arise from a stacked interval.[16]
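The two generating procedures contrasted here can be sketched side by side on a 12-division circle. The choice of 12 divisions, a generator of 7 steps (the 12-tET fifth), a starting point of 5, and the subdivision factors 2, 2, 3 are all assumptions made for illustration, as are the function names.

```python
def stacked_scale(generator, size, cycle_length=12, start=0):
    """Points reached by stacking one interval (generator) size times around the circle."""
    return sorted((start + i * generator) % cycle_length for i in range(size))


def recursive_levels(factors):
    """Successive grids obtained by subdividing the whole cycle by each factor in turn."""
    parts_per_level = []
    parts = 1
    for factor in factors:
        parts *= factor
        parts_per_level.append(parts)
    total = parts  # number of divisions in the finest grid
    return [[k * total // p for k in range(p)] for p in parts_per_level]


print(stacked_scale(7, 7, start=5))  # seven stacked fifths
print(stacked_scale(7, 5))           # five stacked fifths
print(recursive_levels([2, 2, 3]))   # halves, then quarters, then twelfths
```

Stacking produces unevenly spaced points (steps of 2 and 1 in the seven-note case), whereas recursive subdivision only ever produces evenly spaced grids nested inside one another.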

Ultimately, musical systems which employ equal divisions of a timespan (for rhythm) and an octave (for pitch) are prevalent in a vast number of musical cultures. This model is supported by a broad terrain for making connections from one tradition to another. Cyclic conceptions of music, being so prevalent, provide further platforms for exploration. Perhaps these models could take on a more literal form: a “rhythm-class” cycle or a linear pitch cycle rooted in hertz values.

[Conclusion]

While I have only investigated a few models, there are many more paths to explore. It is possible to venture down additive cyclic models, spiral models, pitch vs. temporal hierarchies, isomorphic serial transformations, temporal equivalents for non-octaviating scales, tonal equivalents for rhythmic integration, etc. In terms of orchestration, it is interesting to consider the ubiquitous tendency for slower frequencies to adopt more stable positions in tonal and temporal musics (slow-moving low tones in percussive music; slow-moving bass notes in harmony). Several benefits arise from the pursuit of this topic. Developing a greater understanding of these two musical domains could likewise advance the understanding of music across cultures. Established isomorphic models could suggest new avenues for analysis within the two domains themselves, opening up new terrain for theorists, composers, and musicologists alike. Both the connections and the disparities that arise between the temporal and tonal domains work hand-in-hand with the cognitive processes guiding a listener. Addressing these models has the potential to clarify nuances of music perception and cognition.

Note

As a student, I note that my understanding of this subject is still nascent and developing. My only goal in this article is to provide a comprehensive introduction to the subject for artists and analysts alike. The concept of similar musical structures in time and tone forces the exchange of knowledge across several fields: ethnomusicology, music theory, auditory perception and cognition, and acoustics. This subject demands rigor in the clarity and definition of musical concepts. My hope in this article is to convey the nuances of these musical terms to the reader, suggesting avenues for additional investigation.

NOTES.

[1] Ashley, Richard. “Musical Pitch Space Across Modalities: Spatial and Other Mappings Through Language and Culture.” pp. 64–71.

[2] Krumhansl, Carol L. “Rhythm and Pitch in Music Cognition.” Psychological Bulletin, vol. 126, no. 1, 2000, pp. 159–179.

[3] London, Justin. “Hearing in Time.” 2004.

[4] Longstaff, Alan. “Acoustics and Audition.” Neuroscience, BIOS Scientific Publishers, 2000, pp. 171–184.

[5] Nozaradan, S., et al. “Tagging the Neuronal Entrainment to Beat and Meter.” Journal of Neuroscience, vol. 31, no. 28, 2011, pp. 10234–10240.

[6] Repp, Bruno H. “Perceiving the Numerosity of Rapidly Occurring Auditory Events in Metrical and Nonmetrical Contexts.” Perception & Psychophysics, vol. 69, no. 4, 2007, pp. 529–543.

[7] Vassilakis, Panteleimon Nestor. “Perceptual and Physical Properties of Amplitude Fluctuation and Their Musical Significance.” 2001.

[8] Smoorenburg, Guido F. “Audibility Region of Combination Tones.” The Journal of the Acoustical Society of America, vol. 52, no. 2B, 1972, pp. 603–614.

[9] Pressing, Jeff, et al. “Cognitive Multiplicity in Polyrhythmic Pattern Performance.” Journal of Experimental Psychology: Human Perception and Performance, vol. 22, no. 5, 1996, pp. 1127–1148.

[10] Pitt, Mark A., and Caroline B. Monahan. “The Perceived Similarity of Auditory Polyrhythms.” Perception & Psychophysics, vol. 41, no. 6, 1987, pp. 534–546.

[11] Peper, C. E., et al. “Frequency-Induced Phase Transitions in Bimanual Tapping.” Biological Cybernetics, vol. 73, no. 4, 1995, pp. 301–309.

[12] Handel, Stephen, and James S. Oshinsky. “The Meter of Syncopated Auditory Polyrhythms.” Perception & Psychophysics, vol. 30, no. 1, 1981, pp. 1–9.

[13] Jones, Mari R., and Marilyn Boltz. “Dynamic Attending and Responses to Time.” Psychological Review, vol. 96, no. 3, 1989, pp. 459–491.

[14] Palmer, Caroline, and Susan Holleran. “Harmonic, Melodic, and Frequency Height Influences in the Perception of Multivoiced Music.” Perception & Psychophysics, vol. 56, no. 3, 1994, pp. 301–312.

[15] Deutsch, Diana, and Edward M. Burns. “Intervals, Scales, and Tuning.” The Psychology of Music, Academic Press, 1999, pp. 252–256.

[16] “Errata: Aspects of Well-Formed Scales.” Music Theory Spectrum, vol. 12, no. 1, 1990, pp. 171–171.

Additional References

Pressing, J. “Cognitive Isomorphisms between Pitch and Rhythm in World Musics: West Africa, The Balkans and Western Tonality.” 1983, pp. 38–61.

London, J. “Some Non-Isomorphisms Between Pitch And Time.” Journal of Music Theory, vol. 46, no. 1-2, Jan. 2002, pp. 127–151.

Rahn, John. “On Pitch or Rhythm: Interpretations of Orderings of and in Pitch and Time.” Perspectives of New Music, vol. 13, no. 2, 1975, p. 182.

Stevens, Catherine. “Cross-Cultural Studies of Musical Pitch and Time.” Acoustical Science and Technology, vol. 25, no. 6, 2004, pp. 433–438.

Bar-Yosef, Amatzia. “A Cross-Cultural Structural Analogy Between Pitch And Time Organizations.” Music Perception: An Interdisciplinary Journal, vol. 24, no. 3, 2007, pp. 265–280.

Stockhausen, Karlheinz. “Structure and Experiential Time.” Die Reihe, vol. 2, 1958, p. 64.

Stockhausen, Karlheinz, and Elaine Barkin. “The Concept of Unity in Electronic Music.” Perspectives of New Music, vol. 1, no. 1, 1962, p. 39.

Grisey, Gérard. “Tempus Ex Machina: A Composer's Reflections on Musical Time.” Contemporary Music Review, vol. 2, no. 1, 1987, pp. 239–275.

Roads, Curtis. Microsound. MIT, 2004.

Zanto, Theodore P., et al. “Neural Correlates of Rhythmic Expectancy.” Advances in Cognitive Psychology, vol. 2, no. 2, Jan. 2006, pp. 221–231.

Grahn, Jessica A. “Neural Mechanisms of Rhythm Perception: Current Findings and Future Perspectives.” Topics in Cognitive Science, vol. 4, no. 4, 2012, pp. 585–606.